Listening: 2020 in Review

December 6, 2020

It's been a great year in listening to music for me, mostly because I've been working from my home office and can play music on my stereo all day. Spotify was kind enough to tell me what albums I listened to most in 2020, so I thought I'd share them here along with some reflections. Writing up this blog post has given me a lot of gratitude for the listening experiences I was fortunate enough to have this year.

Project: Music Generator

November 29, 2020

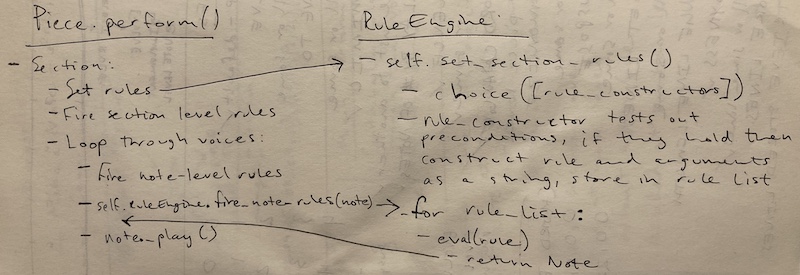

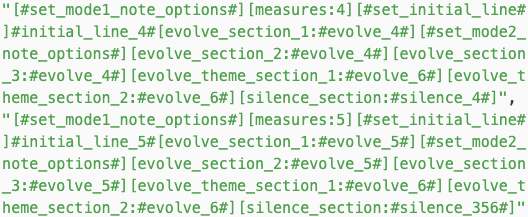

In my last post I talked about generating a Csound score with Tracery. That was a rewarding exercise, but Tracery isn't an ideal language to continue developing a music generator with. I decided to reimplement the music generator entirely in Python in order to take advantage of handy things like lists, conditional statements, functions, and classes. It all came together quicker than I expected, and I was even able to release an album of tracks showcasing the system.

Project: Grammar Music

November 9, 2020

In my previous post I talked about using Tracery to write grammars which generate text. In this post I'll talk about using Tracery to generate Csound scores instead.
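A Tracery grammar is a set of named rules. Each rule is a list of alternatives, and any `#symbol#` inside a string gets replaced by a random expansion of that rule. The minimal Python sketch below imitates that expansion step to emit Csound score `i` statements. It is not Tracery itself and not the grammar used in this project. The rule names, the instrument number `i1`, and the assumption that p4 holds a pitch in Csound's octave.pitch-class (`pch`) notation are all hypothetical. The first note starts at time 0, and later notes use the score's `+` shorthand in the start-time field, which starts a note when the previous one ends.

```python
import random
import re

# Hypothetical Tracery-style grammar: each symbol maps to a list of alternatives,
# and "#name#" inside a string is replaced by a random expansion of rule "name".
GRAMMAR = {
    "origin": ["i1 0 #dur# #pitch#\n#phrase#"],
    "phrase": ["#next#\n#next#\n#next#"],
    # "+" in p2 means "start when the previous note ends" in a Csound score.
    "next": ["i1 + #dur# #pitch#"],
    "dur": ["0.25", "0.5", "1"],
    # Assumed pch pitches (8.00 = middle C); instrument 1 must interpret p4 this way.
    "pitch": ["8.00", "8.02", "8.04", "8.07", "8.09"],
}


def expand(symbol, grammar, rng):
    """Pick one alternative for `symbol`, then recursively expand every #tag# in it."""
    template = rng.choice(grammar[symbol])
    return re.sub(r"#(\w+)#", lambda m: expand(m.group(1), grammar, rng), template)


# A seeded generator makes the output repeatable.
score = expand("origin", GRAMMAR, random.Random(2020))
print(score)
```

Changing the seed gives a different phrase. The printed lines would go in the `<CsScore>` section of a `.csd` file alongside a matching `instr 1`.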

Update: CS 318

October 18, 2020

This fall I've been sitting in on a computer science course at Carleton called CS 318: Computational Media, taught by a visiting professor named James Ryan. This course focuses on new forms of creative expression and media that can only be made with computers. Examples include computer-generated poetry and novels, Twitter bots, computer-generated visual art, and virtual reality works.

Update: The Last Four Months

July 25, 2020

It’s been a while since my last post. Here’s an update on what I’ve been up to the last four months.

Not Doing Csound

I basically stopped working on my Csound projects when I started auditing a computer science course in March (see below). There were two reasons for taking this break from Csound.

- The computer science course took up most of my free time and brain space.

- I had been going down the rabbit hole of frequency modulation (FM) synthesis and was getting overwhelmed. In particular, I was trying to emulate the Yamaha TX81Z synthesizer, which is what Mark Fell used on his Multistability album. However, emulating the TX81Z in Csound is not a simple project. I had gotten in over my head, so I took a little break. I've recently picked this project back up, and I hope to post about it soon.
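For readers new to the term, the simplest FM patch has two sine "operators": a modulator whose output is added to the phase of a carrier, y(t) = A·sin(2π·fc·t + I·sin(2π·fm·t)), where I is the modulation index. Raising I spreads energy into sidebands at fc ± k·fm, which is where FM's bright, metallic timbres come from. The Python sketch below computes only that textbook two-operator case. The TX81Z is a four-operator instrument with selectable operator routings, so this is a starting point rather than an emulation, and the function name and parameters are hypothetical.

```python
import math


def fm_tone(carrier_hz, ratio, index, dur=1.0, sr=44100, amp=0.5):
    """Two-operator FM: one sine modulator feeding the phase of one sine carrier.

    ratio sets the modulator frequency relative to the carrier (fm = fc * ratio);
    index is the modulation index I (0 gives a plain sine).
    """
    mod_hz = carrier_hz * ratio
    n = round(dur * sr)
    out = []
    for k in range(n):
        t = k / sr
        modulator = index * math.sin(2 * math.pi * mod_hz * t)
        out.append(amp * math.sin(2 * math.pi * carrier_hz * t + modulator))
    return out


# A 1:1 ratio with a moderate index gives a brassy, harmonic spectrum.
samples = fm_tone(220.0, ratio=1.0, index=2.0, dur=0.01, sr=1000)
print(len(samples), samples[:3])
```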

Study: Mark Fell - Rhythms

April 3, 2020

I was drawn to the work of Mark Fell this past fall when I was exploring rhythmic pattern generation and FM synthesis. In particular, I was blown away by Fell’s collaboration with Gábor Lázár from 2015 called The Neurobiology of Moral Decision Making.

I wanted to know how Fell and Lázár made these rhythms and sounds. A Google search landed me on this thread in the lines forum where I learned that Fell completed a PhD thesis in 2013 called Works in Sound and Pattern Synthesis. It turns out this thesis provides detailed discussions of Fell’s rhythmic pattern and synthesis algorithms. I decided to implement Fell’s ideas in Csound just like I did with James Tenney’s ideas.

Project: Drum Machine

March 16, 2020

I can’t remember why I decided to build a drum machine in Csound. After spending a few weeks on the James Tenney instrument, which generates notes in a very randomized way, I must have been interested in generating notes within a metrical framework. In other words, I wanted to learn how to use the metro opcode.

But first, why would you build a drum machine when there are dozens of free, full-featured drum machines available online? Two reasons:

- You’ll learn a lot about Csound by building one yourself.

- You can custom code the drum machine to do anything you’d like.
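Csound's `metro` opcode outputs a trigger at a fixed frequency in Hz, so a drum machine can advance a step counter on every trigger and check whether the current step holds a hit. The sketch below does the same bookkeeping offline in Python instead of in real time: it walks hypothetical 16-step patterns and writes one score line per hit. The instrument numbers (1 kick, 2 snare, 3 hi-hat), the fixed hit duration, and the pattern format are assumptions for illustration. At 120 BPM with four steps per beat, each step lasts 0.125 s, which corresponds to a `metro` rate of 8 Hz.

```python
# Hypothetical 16-step patterns, one per Csound instrument number:
# "x" marks a hit, "." a rest.
PATTERNS = {
    1: "x...x...x...x...",  # assumed kick
    2: "....x.......x...",  # assumed snare
    3: "x.x.x.x.x.x.x.x.",  # assumed closed hi-hat
}


def step_seconds(bpm, steps_per_beat=4):
    """Duration of one step in seconds (16th notes when steps_per_beat=4)."""
    return 60.0 / bpm / steps_per_beat


def sequence(patterns, bpm, bars=1, hit_dur=0.1):
    """Walk the step grid like a clocked counter and emit one i-statement per hit."""
    step = step_seconds(bpm)
    events = []
    for bar in range(bars):
        for instr, pattern in patterns.items():
            for i, cell in enumerate(pattern):
                if cell == "x":
                    # Absolute step index times step length gives the start time.
                    start = (bar * len(pattern) + i) * step
                    events.append((start, instr))
    events.sort()  # chronological order, ties broken by instrument number
    return [f"i{instr} {start:.3f} {hit_dur}" for start, instr in events]


for line in sequence(PATTERNS, bpm=120, bars=1):
    print(line)
```

Keeping the patterns as plain strings makes it easy to edit a beat by hand, and anything custom (accents, swing, probability of a hit) can be added inside the inner loop.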

Study: James Tenney

March 8, 2020

I like to study the writings of computer music practitioners and try to implement their ideas in Csound. This expands my Csound skillset and exposes me to different compositional strategies. For example, I spent a few weeks this fall closely reading composer James Tenney’s essay “Computer Music Experiences, 1961-1964,” published in 1969. This essay documents Tenney’s experiences working with the MUSIC-N computer synthesis program at Bell Labs from September 1961 to March 1964.

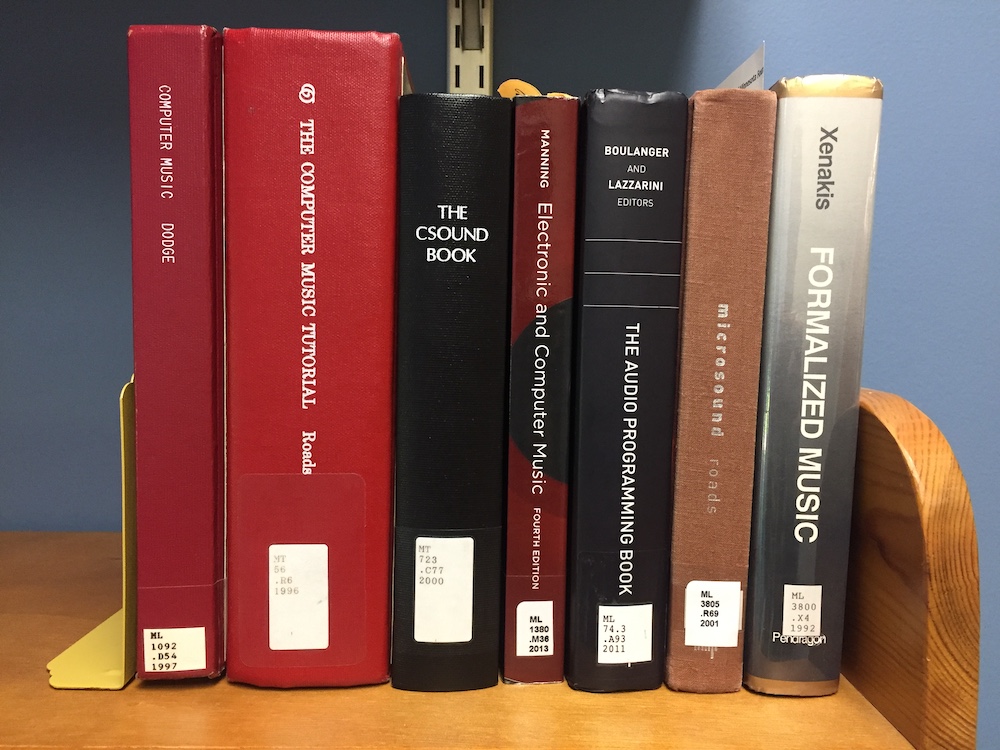

Helpful Resources for Csound

February 3, 2020

Even with the best documentation and the most supportive user community, Csound would still be difficult for anyone to learn. That’s because digital audio generation and processing is hard to grasp conceptually. It has been for me, anyway. Tremendously hard.

The Mysterious World of Csound

October 6, 2019

Six months ago, when I started to learn Csound, I struggled to wrap my head around what exactly it was and what people were doing with it. At the most basic level, I knew it could be used to make strange sounds, but even after I read online documentation, read the first chapters of Csound textbooks, and watched YouTube videos, I still had unanswered questions.

- How many people are using Csound?

- What are they doing with it?

- Where can you find it under the hood in software/hardware applications?

- Is this a ubiquitous language in the music world, or is it an obscure language only used by a cultish group of practitioners?